ARCHIVE of dtqr site (2022 through 2016)

QDAS as part of a digital research workflow (posted November 16, 2022)

We have a new article out providing an overview of how qualitative data analysis software can serve as a hub for the entire digital research workflow in the context of human resource development.

Resources for Transcription Workshop (posted October 10, 2022)

Google docs for the workshop

Google doc: What questions do you have?

Google doc: What is a transcript?

Google doc: Personalizing the workflow

For more information

Transcription: More than just words (by NVivo)

How to import automated transcripts into ATLAS.ti 22

Dedoose series on transcription solutions:

Part 1: Updated transcription solutions for dedoosers: Low and no cost options

Part 2: Data security and privacy considerations

The CAQDAS Networking Project webinar on Transcribing for the 21st Century, with Steve Wright

Fully remote workshop on transcribing practices: October 14, 2022 (posted August 2, 2022)

Creating Transcription Workflows for Recorded Qualitative Data

Are you looking for innovative solutions to the somewhat tedious process of transcribing interviews, interactions and other recorded data? Wondering whether every single “mmhmm” and “yeah” must be transcribed, and how? Hoping that artificial intelligence platforms like Trint or otter.ai can play a role in the transcription process? Heard about auto-captioning features and want to know more?

Dr. Jessica Lester and Dr. Trena Paulus will be offering a fully remote version of this brand new workshop through Indiana University on October 14, 2022 from 9:30 am-3:30 pm EDT.

This workshop is intended for faculty, staff and graduate students, as well as those working in applied social science research contexts. Workshop content is adapted from Paulus & Lester’s (2022) book, Doing Qualitative Research in the Digital World.

This six-hour, highly interactive virtual workshop will offer participants guidance for creating their own digital transcription workflow using a range of tools and spaces. The workshop will focus on:

- Drawing upon the selected research design and methodology to help make decisions around how best to transcribe audio and video recordings

- Distinguishing between methods for transcribing data (verbatim, Jeffersonian, multimodal/embodied, gisted, visual, and/or poetic) and producing the most appropriate type of transcript

- Highlighting the consequences of integrating the available digital tools and spaces that can be used to support both manual and automatic transcription practices.

By the end of the day, participants will have learned about the various types of transcripts and a range of digital tools and spaces for creating a robust transcription workflow, one designed to produce high-quality, analytically useful transcripts.

The day will include four 90-minute sessions with breaks in between. Participants must be able to speak and listen throughout the day in order to participate in break-out room discussions as well as whole-group mini-lectures and application activities. A lunch break will be included.

Reflections from previous workshop participants:

“Thank you to @NinaLester and @TrenaPaulus for providing a fantastic, well-structured and interactive full day workshop on conceptualizing and thinking through digital workflows when working with qualitative data. I know a ton more now than I did at 9:30am #digitalworkflows” @MoniqueYoder, Michigan State University

“I attended the previous one of these and it’s really great – really thought-provoking and full of top tips – check it out #qualitative peeps..” Dr. Christina Silver of the CAQDAS Networking Project

“Thanks for a fabulous workshop! I was singing the praises of your workshop to a few colleagues at the ADDA3 conference over the weekend.” Dr. Riki Thompson, Writing Studies and Digital Rhetoric, University of Washington Tacoma

“Thanks so much for the informative and interactive workshop on qualitative research using digital technology. We need this kind of workshops on qualitative research as the field of human resource development is growing.” Dr. Yonjoo Cho, Associate Professor of Human Resource Development, The University of Texas at Tyler

“Great workshop! I want to order your most recent book and incorporate it into a qualitative data course.” Dr. Jean Hemphill, Professor of Nursing, East Tennessee State University

“I wanted to thank you and Trena again for offering us such an amazing workshop! That was my first and best in-person workshop since I came to IU! I learned so much that I did not know before, such as using Atlas.TI to do literature review, which I will try immediately in the summer, several ways of conducting qualitative interviews, and so many software tools that I will use in the future. I also appreciate the reflection on impact on methods, materiality, outcomes, and humans. Additionally, I had the opportunity to create my digital workflow, which will be used for my Early Inquiry Project writing this summer.” Jinzhi Zhou, Doctoral Student, Indiana University

“I so enjoyed the workshop today. It was exactly what I needed. I’ve been using a case study method in my culture analysis work with law firms and other organizations. My analysis has been by hand, using Excel as my primary tool. I want to explore using maybe the combination of Zotero for lit review, Good Reader App to read and annotate, and then maybe Dedoose to help with analysis.” Dr. Denise Gaskin, RavenWork Coaching & Consulting

Creating Digital Workflows for Qualitative Research (posted July 21, 2022)

Now more than ever, technological innovations combined with the ongoing global pandemic are shaping qualitative research methods and methodologies in complex ways.

Dr. Jessica Lester and Dr. Trena Paulus will be offering, for a final time, a fully remote version of this workshop through Indiana University on September 6, 2022 from 9:30 am-3:30 pm EDT.

This workshop is intended for faculty, staff and graduate students, as well as those working in applied social science research contexts.

The highly interactive sessions will offer participants both theoretical grounding and practical guidance for developing a personalized digital workflow for qualitative research that leverages technological innovations in meaningful and reflexive ways.

Participants will be guided in:

- Critically evaluating and adopting digital tools and spaces in theoretically and methodologically grounded ways;

- Transforming one key qualitative data collection method – interviewing – into a creative and accessible data collection method via engaging with digital tools and spaces;

- Positioning qualitative data analysis software as a core component of a research workflow; and

- Examining the ethical and political consequences of harnessing digital tools and spaces within a qualitative research design.

By the end of the day, participants will have generated their own digital workflow for qualitative research studies and considered key critical appraisal questions to guide future methodological decisions.

Workshop content is adapted from Paulus & Lester’s (2022) book, Doing Qualitative Research in the Digital World.

The day will include small break-out room discussions as well as whole-group mini-lectures and application activities. We will also include regular breaks and a 30-minute lunch break.

Reflections on the Indiana University workshop (posted May 19, 2022)

We had a wonderful time on May 13, 2022, presenting Creating Digital Qualitative Research Workflows at Indiana University, hosted by the Social Science Research Commons.

It was our first in-person post-COVID workshop for faculty, staff and graduate students, which was exciting and strange.

Here is the workshop description; we will offer a virtual version next month.

Now more than ever, technological innovations combined with the ongoing global pandemic are shaping qualitative research methods and methodologies in complex ways. This one-day fully online interactive workshop offers participants both theoretical grounding and practical guidance for developing a personalized digital workflow for qualitative research that leverages technological innovations in meaningful and reflexive ways.

During the workshop, participants will be guided in:

- Critically evaluating and adopting digital tools and spaces in theoretically and methodologically grounded ways;

- Transforming one key qualitative data collection method – interviewing – into a creative and accessible data collection method via engaging with digital tools and spaces;

- Positioning qualitative data analysis software as a core component of a research workflow; and

- Examining the ethical and political consequences of harnessing digital tools and spaces within a qualitative research design.

By the end of the workshop, participants will have generated their own digital workflow for qualitative research studies and considered key critical appraisal questions to guide future methodological decisions. Workshop content is adapted from Paulus & Lester’s (2022) book, Doing Qualitative Research in the Digital World.

The schedule will include interactive small break-out room discussions as well as whole-group mini-lectures and application activities. We will also include regular breaks and a 30-minute lunch break.

Special issue on the future of QDAS is out! (posted March 9, 2018)

We are pleased to announce that the special issue of The Qualitative Report on the future of qualitative data analysis software is out!

These papers were first presented at the KWALON conference in Rotterdam in August of 2016, and we are very pleased that we are able to share them in this open access format. Many thanks to Ron Chenail and the team at TQR, as well as our reviewers.

DTQR special issue available! (posted October 15, 2017)

The special issue of Qualitative Inquiry based on papers from the 2015 ICQI conference is now available. Here is the abstract of our introduction, Digital Tools for Qualitative Research: Disruptions and Entanglements:

In this introduction to the special issue on digital tools for qualitative research, we focus on the intersection of new technologies and methods of inquiry, particularly as this pertains to educating the next generation of scholars. Selected papers from the 2015 International Congress of Qualitative Inquiry special strand on digital tools for qualitative research are brought together here to explore, among other things, blogging as a tool for meaning-making, social media as a data source, data analysis software for engaging in postmodern pastiche and for supporting complex teams, cell phone application design to optimize data collection, and lessons from interactive digital art that pertain to the use of digital tools in qualitative research. This collection disrupts common conceptions (and persistent misconceptions) about the relationship between digital tools and qualitative research and illustrates the entanglements that occur whenever humans intersect with the nonhuman, the human-made, or other humans.

Thank you to all of the authors for their hard work on these papers! They include Jessica MacLaren, Lorena Georgiadou, Jan Bradford, Caitlin Byrne, Amana Marie LeBlanc, Jaewoo Do, Lisa Yamagata-Lynch, Karla Eisen, Cynthia Robins, Judy Davidson, Shanna Rose Thompson, Andrew Harris and Kristi Jackson.

Report on ICQI 2017 (posted June 7, 2017)

Many thanks to everyone who helped make ICQI 2017 a success! We had another full two days of digital tools sessions, along with several pre-conference workshops. Stay tuned for a full report from Kristi Jackson, SIG chair, and in the meantime here are some photos from the event.

*We are especially happy to welcome the following folks as part of the organizing team for ICQI 2018: Caitlin Byrne, David Woods, Daniel Turner, Liz Cooper, Christian Schmeider, Leslie Porreau, and Maureen O’Neill. Thank you all!

**Proposals are generally due at the end of November for the following May conference. Join us next year, and don’t forget to tag your submission as part of the Digital Tools for Qualitative Research special interest group!

See you at ICQI! (posted April 20, 2017)

The final program is out, and you can find all of our Digital Tools SIG sessions!

Here are some important SIG events – hope to see you there!

Thursday, May 18

- 7:30pm: Digital Tools for Qualitative Research social!

- Joe’s Brewery, 706 5th Street (a 10-minute walk from the Illini Union)

- The first drink is on us (while supplies last!), thanks to our generous sponsors: Atlas.ti, Dedoose, MAXQDA (Verbi), NVivo (QSR), QDAMiner (Provalis), Qualitative Data Repository, Queri, Quirkos, and Transana.

Friday, May 19

- 11am – 12:20pm: Digital Tools for Qualitative Research Plenary

- Join us in updating the Wikipedia entries related to qualitative research: A Hands-on Experience. You are invited to learn more about Wikipedia and how you, as a qualitative researcher, could participate in the generation, editing, and critique of the information available to the larger world about qualitative inquiry.

- 205 Architecture (a room just across the hall from the Ricker Library of Architecture and Art).

- We strongly urge all who are coming to the Wikihack to bring their own computers, if possible.


Saturday, May 20

- 11am – 12:20pm: Digital Tools for Qualitative Research SIG meeting: We will elect officers, review our budget, collect feedback and plan for next year.

- There will be time in the agenda for brief announcements and a table for the distribution of materials for attendees (feel free to bring announcements and/or handouts).

To Heck with Heidegger? Using QDAS for Phenomenology (posted March 8, 2017)

Heidegger warned that the use of technology is dehumanizing, and prominent phenomenological methodologists like van Manen (2014) have claimed that QDAS packages “are not the ways of doing phenomenology” (p. 319). Those involved in the development of QDAS, on the other hand, sometimes regard hesitance to use digital tools in qualitative research as due to misconceptions or lack of experience. In this post I stake out a middle ground with advice for phenomenologists wary of technology but eager to take advantage of its strengths. This post is based on a recent article in Forum: Qualitative Social Research (Sohn, 2017).

By Brian Kelleher Sohn

Brian is a recent graduate of the Learning Environments and Educational Studies Ph.D. program at The University of Tennessee, Knoxville. He is a phenomenologist in the field of education. He is currently working on a book about phenomenological pedagogy in higher education.

Max van Manen (2014), a primary source for many human sciences phenomenologists, claims that qualitative data analysis software (QDAS) is not an appropriate tool for phenomenological research. He claims that using “special software” may facilitate thematic analysis in such genres as grounded theory or ethnography, “but these are not the ways of doing phenomenology” (p. 319). He goes on to say that coding, abstracting, and generalization cannot produce “phenomenological insights” (p. 319). The appropriate tools, however, are absent from his and other phenomenological methodologists’ instructions. Must we use pencil and paper? Record interviews in wax? Write our findings with a typewriter?

Phenomenologists’ concerns about technology and its effects on the researcher are well articulated (if extreme) in Goble, Austin, Larsen, Kreitzer, and Brintnell (2012). Framing their concerns with McLuhan’s (2003) “medium is the message” (p. 23) and Heidegger’s (2008) views on technology as dehumanizing, they argue that “through our use of technology we become functions of it” (§1). If I have a hammer, I am likely to become one-that-hammers, in the hyphen-prolific wording of students of Heidegger. The right tool for the job, as we say, makes it easy. But ease, in some sense, is the problem for Goble et al. We all know there’s a difference between writing a letter and writing an email: so what might be lost when we use QDAS instead of (more) manual methods?

“Nothing, you luddites!” QDAS users and developers might respond. Every now and then I notice unbridled enthusiasm in the pro-QDAS camp almost as uncritical as what van Manen and Goble et al. write about technology. Davidson and di Gregorio (2011) argued that the processes of qualitative research, no matter the genre, involve disaggregation and recontextualization of data. Any difference between the genres is attributed to “residue” (p. 639) from battles for legitimacy rather than from genuine concerns or criticisms. Time to get with the times!

I wasn’t sure about getting with the times, but I knew I needed a structure to support my phenomenological dissertation study (Sohn, 2016). Below I’ll share some of the strategies I used to reconcile my concerns with technology and my desire to finish my research and graduate.

As a well-mentored phenomenologist, I had participated tangentially in dozens of research studies (e.g., Bower, Burnette, Lewis, Wright, & Kavanagh, 2016; Dellard, 2013; Franklin, 2013; Worley & Hall, 2012) as part of an interdisciplinary phenomenology research group. In this group we developed research questions, conducted and analyzed bracketing interviews, read and analyzed interview transcripts, and critiqued each other’s preliminary findings. For the most part, the principal investigators in these studies did not use QDAS. It is through this group that I developed an appreciation for face-to-face interaction in conducting phenomenological analysis. I worked with this group for my dissertation, but unlike many of my research group colleagues, I also used the QDAS program MAXQDA. In the space I have here, I’ll talk about three major areas of concern for phenomenologists and what I did to address them.

Dehumanization

Seeing words on a screen is not the same as hearing them in an interview or in another form of data, such as an audio recording of a class session. Goble et al. (2012) felt that their study participants were turned into zeros and ones when their transcripts were uploaded to QDAS, a Matrix-type nightmare for those who wish to maintain the embodied and cognitive aspects of their work. My solution to this problem was to go back to the audio recordings. I listened to them multiple times, both to audit the transcripts and to identify the passages relevant to my second-order analysis of the data. This immersion helped me see the participants’ data as living and breathing (sometimes kind of heavily). MAXQDA and other packages allow synchronization of the audio recordings with the transcript so that you can easily listen while reading and analyzing.

Becoming a Tool

Dehumanization can run in two directions: towards the participants and back at the researcher. Goble et al. (2012) worry that research studies, rather than being opportunities for wonder and discovery, become problems to be solved with QDAS. Instead of artists, we can become functionaries of a capitalist-driven university system that demands results (and publications). This problem is much bigger than QDAS, but when using QDAS, one may feel the pressures of efficiency interfere with quality analysis and writing. For me, when I went to write my results chapter, I thought, “Oh, boy! All the hard work of coding and conceptualizing and memoing and logbooking in MAXQDA will pay off now!” So I started copying and pasting my chapter into existence. I soon found myself with writer’s block, uninspired despite looming deadlines. After realizing I was on autopilot, I returned to some motivational readings from Merleau-Ponty and was able to get back on track. He describes the goal of phenomenological writing as “establishing [the phenomenon] in the writer or the reader as a new sense organ, opening a new field or a new dimension to our experience” (Merleau-Ponty, 1968 [1964], p. 182). I had to return to letting MAXQDA support my writing rather than drive it.

Bracketing

Maintaining wonder, that is, challenging what is known through a careful examination of bias and positionality, is a key component of the phenomenological attitude. QDAS programs make it easy to find literal similarities across documents, and that speed and efficiency may lead to superficial connections between study participants. A faint echo can be magnified when the coded segments pile up. I had to be diligent in my bracketing efforts, both inside and outside MAXQDA. Every four to six weeks I took printed transcripts to the research group for discussion, insight, confirmation, and contestation. In these sessions other members had the opportunity to help me examine what I thought I knew about my study at both a granular and a broad level. The time I spent outside of MAXQDA also gave me distance from my data. We need immersion, we need to dwell in the words of participants, but without stepping back, it is difficult to engage successfully in the abductive thinking required for high-quality phenomenological insights.

As Schuhmann (2011) says, the QDAS user interface “adds a layer of interpretation to qualitative analysis as one has to know how to ‘read’ a software package” (§2). This additional layer, the interface between user and QDAS platform, is where the following recommendations may best serve researchers (more recommendations can be found in Sohn, 2017). In my case, I used MAXQDA to code and immerse myself in my study without feeling the participants had been atomized into cyborgs. I used memo and logbook features without turning my thoughts into restrictive categories. When I did feel as if an over-reliance on MAXQDA was hurting my study, I returned to the research group and reread phenomenological writings to reignite my motivation for producing the report. To maintain the phenomenological attitude while using QDAS, I recommend the following: keep your feet both inside and outside the study, and be diligent and exhaustive in bracketing.

References

Bower, K., Burnette, T., Lewis, D., Wright, C., & Kavanagh, K. (2016). “I had one job and that was to make milk”: Mothers’ experiences expressing milk for their very-low-birth-weight infants. Journal of Human Lactation, 33(1), 188–194. DOI: 10.1177/0890334416679382

Davidson, J., & di Gregorio, S. (2011). Qualitative research and technology: In the midst of a revolution. In N. Denzin & Y. Lincoln (Eds.), Handbook of qualitative research (4th ed., pp. 627-643). London: Sage.

Dellard, T. J. (2013). Pre-service teachers’ perceptions and experiences of family engagement: A phenomenological investigation. (Doctoral dissertation, University of Tennessee, Knoxville). Retrieved from http://trace.tennessee.edu/utk_graddiss/2416/

Franklin, K. A. (2013). Conversations with a phenomenologist: A phenomenologically oriented case study of instructional planning (Doctoral dissertation, University of Tennessee, Knoxville). Retrieved from http://trace.tennessee.edu/utk_graddiss/1721/

Goble, E., Austin, W., Larsen, D., Kreitzer, L., & Brintnell, S. (2012). Habits of mind and the split-mind effect: When computer-assisted qualitative data analysis software is used in phenomenological research. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 13(2), Art. 2.

Heidegger, M. (2008 [1977]). The question concerning technology. In D. Farrell (Ed.), Basic writings (pp. 311-341). New York: Harper Perennial.

McLuhan, M. (2003 [1964]). Understanding media: The extensions of man. Toronto, ON: McGraw Hill.

Merleau-Ponty, M. (1968 [1964]). The visible and the invisible (ed. by C. Lefort, transl. by A. Lingis). Evanston, IL: Northwestern University Press.

Schuhmann, C. (2011). Comment: Computer technology and qualitative research: A rendezvous between exactness and ambiguity. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 12(1).

Sohn, B. (2016). The student experience of other students. Doctoral dissertation, University of Tennessee, Knoxville, USA.

Sohn, B. (2017). Phenomenology and Qualitative Data Analysis Software (QDAS): A Careful Reconciliation. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 18(1), Art. 14, http://nbn-resolving.de/urn:nbn:de:0114-fqs1701142.

van Manen, M. (2014). Phenomenology of practice: Meaning-giving methods in phenomenological research and writing. Walnut Creek, CA: Left Coast Press.

Worley, J., & Hall, J. M. (2012). Doctor shopping: A concept analysis. Research and Theory for Nursing Practice, 26(4), 262-278.

Using spreadsheets as a qualitative analysis tool (posted November 28, 2016)

Don’t have access to qualitative data analysis software? This week’s blog post provides some tips on how to prepare your transcripts to work within Microsoft Excel or Google Sheets.

By Dustin De Felice and Valerie Janesick

Are you in the middle of a research project? Or are you just starting and thinking about the tools you may need during analysis? Given the variety of choices out there, we discuss one choice not often considered in qualitative research: spreadsheets. While a number of spreadsheet programs and apps are available, we focus on the steps needed to prepare your files for two of them: MS Excel and Google Sheets. Either program is a good option, though each has its own advantages (view our handout for a visual overview). In De Felice and Janesick (2015), we outline steps to take with Excel. For this discussion, we focus on preparing your transcripts so that they are ready to be imported into a spreadsheet. We recommend starting with the technique described by Meyer and Avery (2009), who provide a simple and effective technique for importing transcription files into Excel (version 2003). They recommend Excel because it handles large amounts of data (numeric and text), it lets the researcher add multiple attributes during the analysis process, and it allows for a variety of display techniques, including various ways of filtering, organizing, and sorting.

In De Felice and Janesick (2015), we recommend using Excel because the format mirrors many phenomenological methods (Giorgi, 2009; Moustakas, 1994). In addition, spreadsheet programs are powerful, yet not as overwhelming as other data analysis tools. In De Felice and Janesick (forthcoming), we extend our recommendation beyond Excel because a number of useful features are available in Google Sheets. One benefit of Sheets is that it is widely available and most features are free. It also allows for collaboration, including simultaneous editing of the same sheet. This feature is available within Excel, but a number of barriers can limit it (e.g., cost, different versions). In general, we recommend either spreadsheet for most projects, though there are some compelling reasons to choose one over the other.

In terms of design, we recommend asking yourself this question before transcribing: “What unit do you have in mind when you think about your analysis?” (see Meyer & Avery, 2009). The answer can help you decide how best to use either program. For example, will you use the turns in a conversation as the unit, or the phrase or sentence? Of course, there are other ways to analyze the data, so we recommend starting with Meyer and Avery’s discussion of the “codable unit” (2009, pp. 95-96). Before describing some of our steps, we note that they are most effective for a researcher who conducts and transcribes interviews (from audio).
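If you settle on the sentence rather than the turn as your codable unit, each turn has to be broken into several rows before import. The following is a minimal Python sketch of that step, offered as an illustration rather than a procedure from Meyer and Avery (2009). The function name `turn_to_sentence_units` is hypothetical, and the rule that a sentence ends at ., ? or ! followed by whitespace is a simplifying assumption that will mis-split abbreviations such as “Dr. Smith”.

```python
import re

# Assumed (naive) sentence boundary: whitespace that follows ., ? or !.
# This mis-splits abbreviations such as "Dr. Smith".
SENTENCE_END = re.compile(r"(?<=[.?!])\s+")


def turn_to_sentence_units(speaker, utterance):
    """Split one conversational turn into sentence-level codable units.

    Returns a list of (speaker, sentence) tuples, one per unit; empty
    pieces are dropped, so a blank utterance yields an empty list.
    """
    sentences = [s.strip() for s in SENTENCE_END.split(utterance.strip())]
    return [(speaker, s) for s in sentences if s]


units = turn_to_sentence_units("P1", "I liked the course. Did it help? Yes!")
for unit in units:
    print(unit)
```

Each tuple would become one spreadsheet row, so the speaker label is repeated on every sentence from the same turn and can still be used for filtering.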

Once you have identified your codable unit, you need to establish some conventions within your transcriptions to ensure your work will import correctly. In Excel and Sheets, you can choose among these separator characters: Tab, Comma, or Custom (essentially any single symbol, including a hard tab, as shown in Figure 1). The separator character dictates how your text is divided into cells across rows and down columns. While you can change separator characters, it is best to establish one convention within your transcriptions and stick with it throughout your research project.
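A custom separator is typically applied at every occurrence of that character, so a stray extra separator (for example, the colon in a time such as 9:30) pushes text into an extra column, and a missing one leaves the speaker and utterance fused in one cell. A quick consistency check before import can catch both problems. Below is a minimal Python sketch, assuming one turn per line and the colon as the separator; `find_separator_problems` is a hypothetical helper, not a feature of Excel or Sheets.

```python
def find_separator_problems(lines, separator=":"):
    """Return (line_number, count) for each non-blank line that does not
    contain the separator exactly once.

    count > 1: the extra separator would create an extra column on import.
    count == 0: speaker and utterance would end up in the same cell.
    """
    problems = []
    for number, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # blank lines are skipped here
        count = line.count(separator)
        if count != 1:
            problems.append((number, count))
    return problems


transcript = [
    "Interviewer: How did the first week go?",
    "P1: Fine, class started at 9:30 every day.",
    "P1 it was a lot of reading",
]
print(find_separator_problems(transcript))
```

Here line 2 is flagged because the time adds a second colon, and line 3 because the colon after the speaker label is missing.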

Figure 1: Screenshot of the options available for importing a file into Google Sheets. (see image in sidebar)

This import step is necessary because spreadsheets are not the easiest tools to use when transcribing. Instead, we recommend using a word processor to create your transcripts and saving them in plain text (.txt) format. Figure 2 shows a screenshot from within a word processor. In the background of the screenshot is an example transcription that uses a turn in conversation as the codable unit and the colon as the separator character.
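Part of this preparation can also be scripted. The following is a minimal Python sketch, assuming a plain-text transcript with one turn per line in the form `Speaker: utterance`. `transcript_to_tsv` is a hypothetical helper, and the added turn-number column is an assumption rather than part of the workflow described above. It splits each line only at the first colon and writes tab-separated text, which can then be imported with the Tab separator.

```python
import csv
import io


def transcript_to_tsv(text, separator=":"):
    """Convert 'Speaker: utterance' lines into tab-separated rows.

    Each non-blank line is treated as one turn (the codable unit). The line
    is split only at the FIRST separator, so colons inside the utterance
    stay in the utterance column. A turn-number column is added so the
    original order can be restored after sorting or filtering.
    """
    output = io.StringIO()
    writer = csv.writer(output, delimiter="\t", lineterminator="\n")
    writer.writerow(["turn", "speaker", "utterance"])
    turn = 0
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines between turns
        speaker, found, utterance = line.partition(separator)
        if not found:
            # No separator: keep the whole line as an unattributed utterance.
            speaker, utterance = "", line
        turn += 1
        writer.writerow([turn, speaker.strip(), utterance.strip()])
    return output.getvalue()


sample = """Interviewer: How did the first week go?
P1: Fine. Class started at 9:30 every day.
"""
print(transcript_to_tsv(sample), end="")
```

To produce a file for import, the result could be written with `open("transcript.tsv", "w", encoding="utf-8", newline="")`; the file name is only an example. Splitting at the first separator rather than at every one is what keeps a time like 9:30 in a single cell.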

Figure 2: Screenshot of Google docs file that we downloaded as plain text (.txt). (see image in sidebar)

We have used different word processors, and our preference for working with transcriptions is MS Word. We provide detailed steps in De Felice and Janesick (2015) on p. 1585. Our main reason for this choice is that Google Docs has no way to show formatting marks (see Figure 3 for the formatting marks available in MS Word; these are not visible in Google Docs). These formatting marks are essential for properly preparing the transcription for import. We hope an add-on will eventually correct this oversight in Google Docs, but there is currently no workaround.

Figure 3: Screenshot of formatting marks within MS Word. (see image in sidebar)

Once your text file is ready for import, you can use the spreadsheet as an analysis tool. For phenomenological studies, we outline a few ways of using Excel in this capacity in De Felice and Janesick (2015). Throughout your research project, however, we recommend keeping in mind this fantastic advice from Meyer and Avery: “All research projects (and researchers) are not the same. What works for one project may not be best for another” (2009, p. 92). We offer the same advice for our suggestions here.

References

De Felice, D., & Janesick, V. J. (2015). Understanding the marriage of technology and phenomenological research: From design to analysis. The Qualitative Report, 20(10), 1576-1593. Retrieved from http://nsuworks.nova.edu/tqr/vol20/iss10/3

Giorgi, A. (2009). The descriptive phenomenological method in psychology. A modified Husserlian approach. Pittsburgh, PA: Duquesne University Press.

Meyer, D. Z., & Avery, L. M. (2009). Excel as a qualitative data analysis tool. Field Methods, 21, 91-112. DOI: 10.1177/1525822X08323985

Moustakas, C. (1994). Phenomenological research methods. Thousand Oaks, CA: Sage.

Five-Level QDA (posted November 15, 2016)

As we look ahead to ICQI 2017, we are sharing a few more reflections on this year’s conference. Here, Christina Silver and Nicholas Woolf describe their work on Five-Level QDA.

Christina Silver and Nicholas H Woolf, Five-Level QDA

In the Digital Tools Stream at ICQI 2016 we presented two papers discussing our work to develop and implement the Five-Level QDA℠ Method, a CAQDAS pedagogy that transcends methodologies, software programs, and teaching modes. The first, “Operationalizing our responsibilities: equipping universities to embed CAQDAS into curricula,” was presented by Christina in the opening plenary session. The second, “Five-level QDA: A pedagogy for improving analysis quality when using CAQDAS,” was presented jointly by Nicholas and Christina. Here we briefly summarize these two papers. You can find out more about the Five-Level QDA method and our current work by visiting our website.

Responsibilities for effective CAQDAS teaching in the digital age

There is an expanding range of digital tools to support the entire process of undertaking qualitative and mixed methods research, and current generations of students expect to use them (Paulus et al., 2014), whatever their disciplinary, methodological or analytic context. Although many researchers use general-purpose programs to accomplish some or all of their analysis, we focus on dedicated Computer Assisted Qualitative Data AnalysiS (CAQDAS) packages. CAQDAS packages are now widely used, and research shows that uptake continues to increase (White et al., 2012; Gibbs, 2014; Woods et al., 2015). However, there is little evidence that their use is widely embedded in university curricula. There may be several reasons for this (Gibbs, 2014), including the difficulty of attending to diverse learner needs, which are shaped by learners’ methodological awareness, analytic adeptness and technological proficiency (Silver & Rivers, 2015).

These issues highlight the importance of developing effective ways of embedding CAQDAS teaching into university curricula. This long-standing issue has been debated for as long as these programs have been available, and it is widely agreed that the appropriate use of digital technologies must be taught in the context of methodology (Davidson & Jacobs, 2008; Johnston, 2006; Kuckartz, 2012; Richards, 2002; Richards & Richards, 1994; Silver & Rivers, 2015; Silver & Woolf, 2015). However, few published journal articles illustrate the teaching of CAQDAS packages as part of an integrated methods course (recent exceptions are Paulus & Bennett, 2015; Bourque & Bourdon, 2016; and Leitch et al., 2016). Several reflective discussions regarding the integration of methodological, analytical and technological teaching are insightful and useful (e.g., Carvajal, 2002; Walsh, 2003; Davidson & Jacobs, 2008; di Gregorio & Davidson, 2008), as are concrete examples of course content, modes of delivery, course assignments and evaluation methods (e.g., Este et al., 1998; Blank, 2004; Kaczynski & Kelly, 2004; Davidson et al., 2008; Onwuegbuzie et al., 2012; Leitch et al., 2015; Paulus & Bennett, 2015; Bourque & Bourdon, 2016; Jackson, 2003). However, these writers provide varying degrees of detail about instructional design, and their accounts are contextually specific, focusing on the use of a particular CAQDAS program, a disciplinary domain, and/or a particular analytic framework. Their transferability and pedagogical value may therefore be limited where there is an intention to use different methodologies, analytic techniques and software programs.

There are clear challenges in teaching qualitative methodology, analytic technique and technology concurrently, and the literature offers little guidance on doing so. Although the challenges are real, they need not be seen as barriers. A pedagogy that transcends methodologies, analytic techniques, software packages and teaching modes could prompt a step-change in the way qualitative research in the digital environment is taught (Silver & Woolf, 2015). The Five-Level QDA method is designed as such a pedagogy, with the explicit goal of addressing these challenges.

The Five-Level QDA Method: a CAQDAS pedagogy that spans methodologies, software packages and teaching modes

The Five-Level QDA method (Woolf, 2014; Silver & Woolf, 2015; Woolf & Silver, in press) is a pedagogy for learning to harness CAQDAS packages powerfully. The phrase “harness CAQDAS packages powerfully” isn’t just a fancy way of saying “use CAQDAS packages well”. It means using the chosen software from the start to the end of a project, while remaining true throughout to the iterative and emergent spirit of qualitative and mixed methods research. It isn’t a new or different way of undertaking analysis. Rather, it makes explicit the unconscious practices of expert CAQDAS users, and it was developed from our experience of using, teaching, observing and researching these software programs over the past two decades. It differs from the taken-for-granted, common-sense approach of simply observing the features on a computer screen and looking for ways of using them.

The principles behind the Five-Level QDA Method

The core principle is the need to distinguish analytic strategies (what we plan to do) from software tactics (how we plan to do it). As uncontroversial as this sounds, strategies and tactics are commonly treated as synonyms or near-synonyms in everyday language. This leads researchers, often unconsciously, to consider the methods of a QDA and the use of the CAQDAS package’s features together, as a single process of what we plan to do and how we plan to do it. As a consequence, the features of the software drive the analytic process to a greater or lesser degree.

The next principle is recognizing the contradiction between the nature of QDA, which is to varying degrees iterative and emergent, and the predetermined, cut-and-dried steps of computer software. When this contradiction is not consciously recognized, one side is privileged. If the strategy is privileged, the software is not used to its full potential throughout a project. If the tactics are privileged, the iterative and emergent aspects of a QDA are suppressed to some degree. When the contradiction is consciously recognized, however, it must be reconciled in some way. One approach is a compromise, or trade-off, in which the analytic tasks of a project are raised to a more general level and expressed as a generic model of data analysis, so that they more easily match the observed features of CAQDAS packages (terms in italics have a specific meaning in Five-Level QDA).

The Five-Level QDA method follows Luttwak’s (2001) five-level model of military strategy and takes a different approach to the contradiction between strategies and tactics. Instead of reconciling it, the method places the contradiction in a larger context in order to transcend it. Regardless of research design and methodology, there are two levels of strategy: the project’s methodology and objectives (Level 1), and the analytic plan (Level 2) that arises from those objectives. There are similarly two levels of tactics: the straightforward use of software tools (Level 4) and the sophisticated use of tools (Level 5). We use the term tools in a particular way. We are not referring to software features, but to ways of acting on software components, the things in the software that can be acted upon. Whereas CAQDAS packages have hundreds of features, they have far fewer components, typically around 15-20.

The critical middle level between the strategies and tactics is the process of translation (Level 3). Rather than raising analytic tasks to the level of software features, translation lowers each analytic task to the level of its units, which are then matched, or translated, to the components of the CAQDAS package. Action therefore always flows in a single direction: from analytic strategies to software tactics, never the other way around. This ensures that the analytic strategies, not the available features of the software, drive the analytic process. Because translation operates at the level of individual analytic tasks, the method is relevant across methodologies and software programs.

Figure 1 provides an overview of the Five-Level QDA Method (see image in sidebar).

Several people at our presentations told us that this chart implies the process is linear and hierarchical. That is misleading, because all forms of QDA are to varying degrees iterative and emergent. Since ICQI, therefore, we have created a diagram that we hope more accurately reflects the iterative, cyclical nature of the process (Figure 2, see image in sidebar).

Figure 2 - Five-Level QDA diagram (see image in sidebar)

Tools for teaching the Five-Level QDA Method

In order to illustrate translation in the context of learners’ own projects, we have developed two displays: Analytic Overviews (AOs) and Analytic Planning Worksheets (APWs). AOs provide a framework for developing a whole project, described at the strategy levels (Levels 1 and 2). APWs scaffold the process of translation, developing the skill of matching the units of analytic tasks to software components in order to work out whether a software tool can be selected or needs to be constructed. We don’t have space here to illustrate translation in detail, but teaching the Five-Level QDA method via AOs and APWs is discussed further in Silver & Woolf (2015) and Woolf & Silver (in press).

Implementing and researching the Five-Level QDA℠ Method

The Five-Level QDA method provides an adaptable method for teaching and learning CAQDAS. Since 2013 we’ve been using it in our own research and teaching in many different contexts, and we’ve used feedback from our students and peers to refine the AOs and APWs. We’re also working with several universities to improve provision of CAQDAS teaching, using the Five-Level QDA method in different learning contexts.

We believe that the Five-Level QDA method is adaptable to a range of instructional designs. These include face-to-face workshops of different designs, remote learning, and textbooks with complementary video tutorials (Woolf & Silver, in press). This is because it provides a framework through which qualitative and mixed methods research and analysis can be taught at the strategies level within the context of digital environments. Instructional designs that teach qualitative methodology and analytic techniques via the use of CAQDAS packages illustrate that qualitative methodology and technology need not be introduced to students separately (e.g., Davidson et al., 2008; Bourque & Bourdon, 2016; Leitch et al., 2016). The Five-Level QDA method presupposes that it is not possible to adequately teach technology without methodology. We would also argue that in our increasingly digital environment it is becoming less acceptable to teach methodology without technology. The framework gives separate emphasis to analytic strategies and software tactics within a single structure. This enables methodology and technology to be taught concurrently, within an instructional design that is adaptable to local contexts. The same framework also serves as a method for researchers to harness CAQDAS in their own projects.

We are currently evaluating our work and welcome opportunities to work with others to implement and further research the effectiveness of the Five-Level QDA method in different contexts.

References

Blank, G. (2004). Teaching Qualitative Data Analysis to Graduate Students. Social Science Computer Review, 22(2), 187–196. http://doi.org/10.1177/0894439303262559

Bourque, C. J., & Bourdon, S. (2016). Multidisciplinary graduate training in social research methodology and computer-assisted qualitative data analysis: a hands-on/hands-off course design. Journal of Further and Higher Education, 1–17. http://doi.org/10.1080/0309877X.2015.1135882

Carvajal, D. (2002). The Artisan’s Tools: Critical Issues When Teaching and Learning CAQDAS. Forum Qualitative Sozialforschung / Forum: Qualitative Social Research, 3(2), Art. 14.

Davidson, J. (n.d.). Thinking as a Teacher: Fully Integrating NVivo into a Qualitative Research Class.

Davidson, J., & Jacobs, C. (2008). The Implications of Qualitative Research Software for Doctoral Work. Qualitative Research Journal, 8(2), 72–80. http://doi.org/10.3316/QRJ0802072

Davidson, J., Jacobs, C., Siccama, C., Donohoe, K., Hardy Gallagher, S., & Robertson, S. (2008). Teaching Qualitative Data Analysis Software (QDAS) in a Virtual Environment: Team Curriculum Development of an NVivo Training Workshop. In Fourth International Congress on Qualitative Inquiry (pp. 1–14).

Este, D., Sieppert, J., & Barsky, A. (1998). Teaching and Learning Qualitative Research With and Without Qualitative Data Analysis Software. Journal of Research on Computing in Education, 31(2), 17. http://doi.org/10.1080/08886504.1998.10782247

Gibbs, G. R. (2014). Count: Developing STEM skills in qualitative research methods teaching and learning. Retrieved from https://www.heacademy.ac.uk/sites/default/files/resources/Huddersfield_Final.pdf

Jackson, K. (2003). Blending technology and methodology. A shift towards creative instruction of qualitative methods with NVivo. Qualitative Research Journal, 3(Special Issue), 15.

Johnston, L. (2006). Software and Method: Reflections on Teaching and Using QSR NVivo in Doctoral Research. International Journal of Social Research Methodology, 9(5), 379–391. http://doi.org/10.1080/13645570600659433

Kaczynski, D., & Kelly, M. (2004). Curriculum Development for Teaching Qualitative Data Analysis Online. QualIT 2004: International Conference on Qualitative Research in IT and IT in Qualitative Research, (November), 9.

Leitch, J., Oktay, J., & Meehan, B. (2015). A dual instructional model for computer-assisted qualitative data analysis software integrating faculty member and specialized instructor: Implementation, reflections, and recommendations. Qualitative Social Work. http://doi.org/10.1177/1473325015618773

Onwuegbuzie, A. J., Leech, N. L., Slate, J. R., Stark, M., Sharma, B., Frels, R., … Combs, J. P. (2012). An exemplar for teaching and learning qualitative research. The Qualitative Report, 17(1), 646–647. Retrieved from http://www.nova.edu/ssss/QR/QR17-1/onwuegbuzie.pdf

Paulus, T. M., & Bennett, A. M. (2015). “I have a love–hate relationship with ATLAS.ti”™: integrating qualitative data analysis software into a graduate research methods course. International Journal of Research & Method in Education, (June), 1–17. http://doi.org/10.1080/1743727X.2015.1056137

Silver, C., & Rivers, C. (2015). The CAQDAS Postgraduate Learning Model: an interplay between methodological awareness, analytic adeptness and technological proficiency. International Journal of Social Research Methodology, 1–17. http://doi.org/10.1080/13645579.2015.1061816

Silver, C., & Woolf, N. H. (2015). From guided-instruction to facilitation of learning: the development of Five-level QDA as a CAQDAS pedagogy that explicates the practices of expert users. International Journal of Social Research Methodology, (July 2015), 1–17. http://doi.org/10.1080/13645579.2015.1062626

Walsh, M. (2003). Teaching Qualitative Analysis Using QSR NVivo 1. The Qualitative Report, 8(2), 251–256. Retrieved from http://www.nova.edu/ssss/QR/QR8-2/walsh.pdf

White, M. J., Judd, M. D., & Poliandri, S. (2012). Illumination with a Dim Bulb? What do social scientists learn by employing qualitative data analysis software (QDAS) in the service of multi-method designs? Sociological Methodology, 42(1), 43–76. http://doi.org/10.1177/0081175012461233

Woods, M., Paulus, T., Atkins, D. P., & Macklin, R. (2015). Advancing Qualitative Research Using Qualitative Data Analysis Software (QDAS)? Reviewing Potential Versus Practice in Published Studies using ATLAS.ti and NVivo, 1994–2013. Social Science Computer Review, 0894439315596311. http://doi.org/10.1177/0894439315596311

Woolf, N. H., & Silver, C. (in press). Qualitative analysis using ATLAS.ti: The Five-Level QDA Method. London: Routledge.

Woolf, N. H., & Silver, C. (in press). Qualitative analysis using MAXQDA: The Five-Level QDA Method. London: Routledge.

Woolf, N. H., & Silver, C. (in press). Qualitative analysis using NVivo: The Five-Level QDA Method. London: Routledge.

Transparency, QDAS, and Complex Qualitative Research Teams (posted November 3, 2016)

by Judith Davidson

Judith Davidson is an Associate Professor in the Research Methods and Program Evaluation Ph.D. Program of the Graduate School of Education, University of Massachusetts Lowell. She is a founding member of the ICQI Digital Tools Special Interest Group. She is currently working on a book about complex qualitative research teams, which she is writing in NVivo!

Qualitative researchers increasingly find themselves working as members of a complex research team. Multiple members, multiple disciplines, geographically dispersed—these are just some of the forms of diversity that we face in our research endeavors. Many of these research teams employ Qualitative Data Analysis Software (QDAS).

While there are many reasons to use QDAS in complex team research, the one I wish to talk about here is support for transparency: making clear how results were reached, or showing evidence of the interpretive process that indicates the conclusions are believable. Transparency has long been held up as a virtuous and important notion in qualitative research, but, as with many things in qualitative research, most of our descriptions relate to individually conducted research, not team-based research projects. Moreover, our considerations of transparency have not yet adequately addressed qualitative research conducted with QDAS.

As part of the 2016 ICQI Digital Tools strand and a panel examining issues related to QDAS use with complex teams, I presented a paper titled “Qualitative Data Analysis Software Practices in Complex Qualitative Research Teams: Troubling the Assumptions about Transparency (and Portability)” (Davidson, Thompson, and Harris, under review) that sought to get at some of the issues that arise at the nexus of complex teams, qualitative research, QDAS, and transparency.

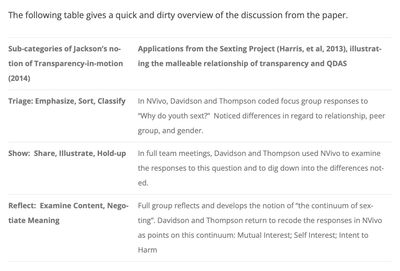

Our paper applied Jackson’s notion of transparency-in-motion (Jackson, 2014) to the methodological process of a complex team project in which we had been engaged, Building a Prevention Framework to Address Teen “Sexting” Behaviors, or the ‘Sexting Project’ (Harris et al., 2013; Davidson, 2014). Jackson’s ideas were derived from a study of ‘lone ranger’ researchers: doctoral students using QDAS in their own, individual research work. In contrast, the goal of our paper was to demonstrate how Jackson’s descriptive categories of transparency-in-motion (triage, show, and reflect) are enacted by real teams working under the real-world restrictions that teams often face when trying to use QDAS. In the article, we follow the development of one finding from the Sexting Project, the continuum of sexting, to show how QDAS use wove in and out of the stages of triage, show, and reflect as this term evolved for the research team (Davidson, 2011).

The Sexting Project was conducted by a multidisciplinary team located at three institutions of higher education in three regions of the United States. Focus group data about views of teen sexting were collected from three separate audiences at these locations: teens, teen caregivers, and those who worked with and for teens. It was one of the first qualitative research studies conducted on the topic of sexting. All data collected for the study were organized in an NVivo database maintained by the lead site.

The table (in the sidebar) gives a quick and dirty overview of the discussion from the paper.

| Sub-categories of Jackson’s notion of transparency-in-motion (2014) | Applications from the Sexting Project (Harris et al., 2013), illustrating the malleable relationship of transparency and QDAS |
|---|---|
| Triage: Emphasize, Sort, Classify | In NVivo, Davidson and Thompson coded focus group responses to “Why do youth sext?” and noticed differences with regard to relationship, peer group, and gender. |
| Show: Share, Illustrate, Hold-up | In full team meetings, Davidson and Thompson used NVivo to examine the responses to this question and to dig down into the differences noted. |
| Reflect: Examine Content, Negotiate Meaning | The full group reflects and develops the notion of “the continuum of sexting”. Davidson and Thompson return to recode the responses in NVivo as points on this continuum: Mutual Interest; Self Interest; Intent to Harm. |

Analysis of our process demonstrated that NVivo fulfilled possibilities for transparency-in-motion (triage, show, and reflect) through deep individual analysis with the tool and broader episodic analysis with the full research team. Although team members had differential access to NVivo (only the lead team had a site license), the tool was able to offer all researchers better opportunities for working with the data and visualizing relationships within the data. The QDAS tool could play this role despite these restrictive circumstances because the team had senior leadership knowledgeable and experienced in the use of the tool, who could support full group opportunities to use and think with it.

These findings indicate that Jackson’s notion of transparency-in-motion has relevance to both individual researchers and research teams. Using the key criteria of triage, show, and reflect, researchers were able to manage the use of QDAS as they continuously worked toward transparency-in-motion under less-than-ideal conditions. Discussions of transparency have long been prominent in qualitative research, but we suggest that in today’s post-modern/post-structural world transparency-in-motion may be a more useful perspective for qualitative researchers to adopt.

References

Davidson, J. (2014). Sexting: Gender and Teens. The Netherlands: Sense Publishers.

Davidson, J., Thompson, S., & Harris, A. (under review). Qualitative Data Analysis Software Practices in Complex Qualitative Research Teams: Troubling the Assumptions about Transparency (and Portability).

Harris, A., Davidson, J., Letourneau, E., Paternite, C., & Miofshky, K. T. (September 2013). Building a Prevention Framework to Address Teen “Sexting” Behaviors (189 pp.). Washington, DC: U.S. Department of Justice, Office of Juvenile Justice & Delinquency Prevention.

Jackson, K. (2014). Qualitative Data Analysis Software, Visualizations, and Transparency: Toward an Understanding of Transparency in Motion. Paper presented at the Computer Assisted Qualitative Data Analysis conference, May 3, 2014. Surrey, England.

Points of view or opinions in this document are those of the author and do not necessarily represent the official position or policies of the U.S. Department of Justice, which funded the project.

Call for papers: ICQI2017 (posted October 7, 2016)

Thank you to all of the presenters at the 2016 International Congress of Qualitative Inquiry (ICQI)! We welcomed 49 presenters to our Special Interest Group (SIG) on Digital Tools for Qualitative Research (DTQR), and thanks to our 14 sponsors, we gave away 30 books and software licenses during the raffles. We once again had a wonderful conference experience, and look forward to seeing many of you again in 2017.

ICQI 2017

- Plan now to submit a paper proposal to our DTQR SIG for 2017. Submissions are due December 1 and guidelines are available on the ICQI website (http://icqi.org/).

See our prior mini-programs for ideas:

- If you are interested in helping us review proposal submissions, serving as a session chair, organizing the program, or planning any special events, please let us know.

- There will be several DTQR-related workshops in 2017 – offered by Sharlene Hesse-Biber, Kristi Jackson, Anne Kuckartz, Trena Paulus & Jessica Lester. Check them out! http://icqi.org/home/workshops/

- During our SIG meeting at ICQI 2017 we will propose a leadership structure and hold elections for officers. Positions will include SIG chair (currently Kristi Jackson), program chair (currently Judith Davidson) and outreach/marketing chair (currently Trena Paulus). Position descriptions will be posted on the Digital Tools website prior to the conference for your review and consideration and we will send a comprehensive message regarding this and other conference events a few weeks prior to ICQI 2017.

News and notes

- Our special issue of Qualitative Inquiry on DTQR, based on the 2015 conference papers, is now in press. We will announce its publication through our social media outlets (see below).

- Send us your news! What are you publishing? What other conferences are you attending? What workshops are you hosting? We are happy to share this with the community via our social media outlets.

- We have launched the DTQR blog series and welcome your contributions. Want to share what you’ve been working on, thinking about or theorizing? Contact daniel@quirkos.com with your idea.

- We enjoyed seeing many of you at the KWALON conference in Rotterdam in late August where we continued conversations about the future of QDA software.

- We would love updates from anyone who attended the Qualitative Methods and Research Technologies Research Summit in Cracow, September 1-3. Consider contacting daniel@quirkos.com with your idea for a blog post.

Upcoming events

- The Qualitative Report will hold its annual conference in Fort Lauderdale, Florida: January 12-14, 2017. (http://tqr.nova.edu/tqr-8th-annual-conference/)

- We welcome the newly launched European Congress of Qualitative Inquiry to be held in Leuven, Belgium: February 7-10, 2017.

- Want us to promote your event? Let us know.

Follow us on social media:

- @Digital_Qual

- DigitalToolsforQualitativeResearch on Facebook

Teaching qualitative research online (posted September 15, 2016)


In this digital age, we are using digital tools not only to do our research but also to teach the next generation of scholars. In this month’s blog post, Kathryn Roulston and Kathleen deMarrais from the University of Georgia describe their foray into the world of teaching qualitative research online.

Kathryn Roulston and Kathleen deMarrais

“I hate online teaching!” That is a sentiment that we have heard expressed by some teachers in higher education. In addition to the workload involved in teaching online, many faculty members are skeptical about the technical support they will receive for learning how to teach online, as well as the outcomes of online education for their students. Although we too have experienced our own doubts at times, we have voluntarily developed online coursework for the Qualitative Research program at the University of Georgia. We have learned much from this journey into online education, and are happy with the outcomes for our students.

To begin, we started with courses that we had taught for many years in face-to-face contexts. Over a period of years, we developed the online course content as we taught hybrid versions of our courses that involved both face-to-face and online instruction. In 2014, we began to offer the core courses of our on-campus certificate in Interdisciplinary Qualitative Studies in a fully online format. Judith Preissle and Kathleen deMarrais’s notion of “qualitative pedagogy” informs our approach to online teaching. That is, we approach teaching in the same way we approach doing qualitative research: by being responsive, recursive, reflexive, reflective, and contextual.

We have attended to how students respond to and engage with online coursework by conducting a research study (with Trena Paulus and Elizabeth Pope) of graduate students’ perceptions and experiences of online delivery of coursework. Over the past two years, we have interviewed 18 students from four courses. We have also looked at naturally occurring interaction during the courses. Below we share some of what we have found to date.

Why do students choose online coursework?

Convenience, flexibility and accessibility. Students take online coursework because they perceive it to be convenient and accessible for commuters and distance students. On-campus students who are not commuters also choose to take online coursework because not having to attend face-to-face classes allows more flexibility in individual schedules. We have found that even students who have had poor prior experiences in online courses, or who prefer to take coursework face-to-face, will choose online coursework if it is thought to be more convenient or accessible.

Learning through reading and writing. Some students prefer taking coursework online rather than face-to-face, because they appreciate the intensive reading and writing involved. Further, since students in our courses are required to post weekly, more reticent students are more visible than they might otherwise be in face-to-face contexts. Students are also able to revisit online resources, something that is appreciated by English language learners especially.

What are the challenges of learning online?

Managing the weekly schedule. To be successful in the online learning context, students must learn to manage the weekly schedule in new ways. Students in our coursework are expected to log in to the Learning Management System (LMS) several times a week; those who do not can easily fall behind and feel overwhelmed.

Course design and organization. Understanding what is expected each week and locating relevant materials may be an initial challenge for students. This is aided when courses use a repetitive design in which content materials are organized in a standardized format with expectations for what is required each week clearly identified. Students are expected to access content in modules that are organized similarly, and post and respond to one another’s work at the same time each week. Although several of our students reported that they perceive this kind of routinization as somewhat tedious, it appears to help the majority of students to accomplish tasks successfully.

Mastering content. Students view learning about approaches to qualitative inquiry, and how to design and conduct qualitative research on a topic of individual interest, as challenging. Because the courses we have taught are delivered asynchronously, students need to let instructors know when they are unclear about what is expected of them. Several students have reported that they are reluctant to ask questions of the instructor online, although multiple forums are provided for them to ask questions related to content, assignments, or technical issues. Students also have opportunities to meet with instructors in an online meeting room to discuss questions, although not all students take advantage of these meetings.

Interacting with others online. Students report that they want to engage and connect with the instructor and other students in authentic ways online. Some students perceive that classmates do not always post in authentic ways. In online learning contexts, misunderstandings can easily occur, so both instructors and students must learn how to communicate effectively in asynchronous formats. Most of all, students appreciate constructive feedback from their instructors and classmates, and several have told us that they have formed friendships with others that extended beyond the life of a course.

How do students assess the learning outcomes of qualitative coursework delivered online?

Overall, students assess the coursework that we have taught online positively and judge the learning outcomes to be equivalent to those of coursework taught face-to-face. Students are able to meet their learning goals with respect to learning about methods used by qualitative researchers (e.g., interviewing; observing; document analysis; data analysis); how to design a qualitative study; the relationship of theory to doing research; assessment of quality; and writing up findings from qualitative studies.

What helps students to be successful in online learning contexts?

Students report that effective learning is facilitated by:

- Clear organization of course content;

- Use of a variety of multi-media resources, including screencasts and videos, audio-enhanced presentations, quizzes, course readings and so forth;

- Timely feedback from the instructor; and

- Course projects that contribute to individual research interests.

What have we learned about teaching qualitative research online?

Learning about our students’ perspectives has helped us to understand the value of:

- Modeling and encouraging students to be authentic in how they represent themselves online (e.g., posting personal photos and/or videos, organization of synchronous online meetings, and sharing of personal details about one’s off-line life).

- Providing specific guidance to students in being reflexive with respect to their learning preferences and management of their course schedule in order to develop self-directed learning skills.

- Structuring activities and assignments in ways that build upon one another across the course sequence.

- Providing clear instructions and content organization to assist students as they navigate the Learning Management System to locate materials and complete assigned tasks.

- Delivering reminders in multiple modes (e.g., email, videos, news items, etc.) to assist students in managing the weekly schedule.

- Encouraging students to be reflective about how they learn and interact with others in the online environment.

- Attending to students as individuals, even as we also consider the wider context in which any particular course is being taught.

As teachers of qualitative inquiry, we have been challenged to adapt to changes in the student populations with whom we work and to innovate in how we teach. Learning to teach online has prompted us to think carefully about how we teach qualitative inquiry and what we expect students to know and be able to do as a result of engaging in our courses. We continue to be excited about learning to teach online and how we might engage students in digital spaces. We know that we still have much to learn about effective online teaching.

For more information on qualitative pedagogy, see:

Preissle, J., & deMarrais, K. (2011). Teaching qualitative research responsively. In N. Denzin & M. D. Giardina (Eds.), Qualitative inquiry and global crisis (pp. 31-39). Walnut Creek, CA: Left Coast Press.

Preissle, J., & deMarrais, K. (2015). Teaching reflexivity in qualitative research: Fostering a life style. In N. Denzin & M. D. Giardina (Eds.), Qualitative inquiry and the politics of research (pp. 189-196). Walnut Creek, CA: Left Coast Press.

For more information on the University of Georgia’s Online Graduate Certificate in Interdisciplinary Qualitative Studies, visit our website.

Speculating on the future of digital tools (posted August 18, 2016)

We are happy to announce the publication of an article in Qualitative Inquiry:

Speculating on the Future of Digital Tools for Qualitative Research

Judith Davidson, Trena Paulus, and Kristi Jackson

Development in digital tools in qualitative research over the past 20 years has been driven by the development of qualitative data analysis software (QDAS) and the Internet. This article highlights three critical issues for the future of digital tools: (a) ethics and its challenges, (b) archiving of qualitative data, and (c) the preparation of qualitative researchers for an era of digital tools. Excited about the future and the possibilities of new mash-ups, we highlight the need for vibrant communities of practice where developers and researchers are supported in the creation and use of digital tools. We also emphasize the need to be able to mix and match across various digital barriers as we engage in research projects with diverse partners.

Launching the DTQR blog series (posted August 2, 2016)

One outcome of the community conversations at ICQI was the decision to launch a series of blog posts related to this year’s presentations. This month’s post is by Kristi Jackson of Queri, Inc. Let us know what you think!

DETERMINISM VS. CONSTRUCTIVISM: THE POLARIZING DISCOURSE REGARDING DIGITAL TOOLS FOR QUALITATIVE RESEARCH AND HOW IT THREATENS OUR SCHOLARSHIP

The Power Broker, written in 1974 by Robert Caro, won a Pulitzer Prize as a biography of one of the most prolific and polarizing urban planners in American history, Robert Moses. Known as the "master builder," Moses displayed racism and elitism in much of his work. He publicly resisted the move of Black veterans into Stuyvesant Town, a Manhattan residential area created specifically to house World War II veterans. He also set his sights on destroying a playground in Central Park to replace it with a parking lot for the expensive Tavern-on-the-Green restaurant. Although he was never elected to any public office, he once held twelve titles simultaneously, including NYC Parks Commissioner and Chairman of the Long Island State Park Commission (Caro, 1974).

In his piece, "Do artifacts have politics?" Langdon Winner (1980) describes the 204 bridges over the parkways of Long Island that were built under the supervision of Moses. Some of these bridges had only nine feet of clearance. According to a claim by a co-worker, Moses purposefully constructed the low bridges to prevent buses from traveling through the area's parkways. This privileged white, upper-class owners of automobiles who could fit their vehicles under the bridges. It simultaneously restricted access for poorer people (often Black residents) who tended to ride buses. This is one of Winner's examples of the social determination of technology: that there are explicit and implicit political purposes in the histories of architecture and city planning.

Because I am here to talk about QDA Software and Digital Tools for Qualitative Research – industries with builders, owners, developers, consultants and trainers – you may be starting to see where I am going with this. While we QDA Software experts like to think we are building bridges – that is, building access – our critics sometimes insist that we are being obstructionist. Before coming back to my story of Robert Moses, let me define my two key terms. As a caveat, I am setting aside some of the diverse understandings and controversies around these terms.

- Determinism presumes that all events, including human actions and choices, are the results of particular causes. This approach to knowledge isolates elements and tends to define them as causes or results.

- Constructivism presumes that views of ourselves and our world are continually evolving according to internal processes of self-organization. These views are not the result of exposure to information or dedicated adherence to principles like Occam’s Razor. Rather, our views of ourselves and our world expand, contract, plateau and adjust from within.

From my insider perspective, experts in QDA Software tend to emphasize constructivist perspectives. Researchers carve out their own paths in the use of the software for a particular study. As Miles and Huberman (1994) argued, the flexible, recursive and iterative capabilities of software provide unprecedented opportunities to challenge researcher conceptualizations. Lyn and Tom Richards (1994) stated that as they began developing NUD*IST, their analysis entailed a constant interrogation of themes. They also stated that one of their primary goals was to allow for a diverse range of theories and methodologies to be applied, and for these to be adjustable over time.

From my vantage point, many critics who argue that QDA Software compromises the qualitative research process are invoking the language of determinism. The particular flavor is one of technological determinism. The most common critiques include the tendency for this genre of software to:

- Distance researchers from the data (Agar, 1991).

- Quantify the data (Welsh, 2002; Hinchliffe, Crang, Reimer & Hudson, 1997).

- Homogenize the research process (Barry, 1998; Coffey, Holbrook & Atkinson, 1996).

- Take precedence over researcher choices (Garcia-Horta & Guerra-Ramos, 2009; Schönfelder, 2011).

- Lull researchers into a false sense of confidence about the quality of their work (MacMillan & Koenig, 2004; Schwandt, 2007).

The point is that when it comes to the debates between critics and supporters of QDA Software, a markedly different perception of freedom is in play. While critics often frame QDA Software with the language of determinism, the advocates often frame it with the language of constructivism. To the former, the software limits personal agency by standardizing processes; to the latter, the software expands options and promotes diversity.

For their scholarship on Robert Moses, Caro received a Pulitzer and Winner has been cited widely. But, suspicious of some of the characterizations of Moses, Bernward Joerges (1999) carefully examined the historical details and presents us with another picture. The regional planner who claimed that Moses purposely built the low bridges to limit access did so almost 50 years after the event, and through his own deduction after measuring the bridge height. Next, Kenneth Jackson, a historian and editor of The Encyclopedia of New York City, repeatedly had his students research some of the themes and episodes in Caro's 1,300-page biography and found that many were "doubtful or tendentious." Most tellingly, correspondence with US civil engineers noted that commercial traffic such as buses and trucks was prohibited from such roads anyway, so building more than 200 unnecessarily high bridges would have been fiscally irresponsible. Finally, Moses constructed the Long Island Expressway alongside the parkways; it did not restrict commercial traffic and could provide access to the beaches in any vehicle.

So, what are we to make of these different characterizations of Robert Moses and what do they have to do with determinism, constructivism and the scholarship about QDA Software?

Thomas Gieryn (1983; 1999) initially introduced boundary work as the activity among scholars who purposefully attempted to demarcate science from non-science. Gieryn argued that boundary work among scientists was part of an ideological style that functioned to promote a public image of some ways of knowing over others and was a key factor in the ascendance of the scientific method. He argued that this boundary work could occur in any discipline, including history (such as my description of the bridges of Robert Moses) and research (such as my descriptions of QDA Software). What I am pointing to, as part of my conclusion, is that most scholars view the bridges of Robert Moses as the contested entity; the bridges are the artifacts that either liberate human behavior by facilitating agency, on one side, or control human behavior by limiting it, on the other.

However, as Joerges (1999) notes in his assessment of the many different ways the bridges have been described for different purposes, the artifact is the telling of the story, not the bridges themselves. The bridge story has been used over and over because it's handy. Because it tells well. Because, in my rendering of it, the telling of the bridge story is an allegory of the many deterministically laden critiques of another artifact: QDA Software. Together these critiques of QDA Software amount to a one-sided, inaccurate view of how the software limits agency and constrains those of us who use it. It is a story told over and over as part of professional boundary work by those who critique the software, just as we use constructivist language to promote it.

But as a QDA Software expert, I am aware of our own boundary work in the way we sometimes talk about qualitative researchers who do not use QDA Software, with unflattering descriptions like:

- Rigid

- Luddites

- Lazy

- Afraid

- Behind the times

- Wildly uninformed about the capabilities of the software

In contrast, most of them would probably use the same constructivist language that we do to define their own work: flexible, diverse, etc.

As a larger community of qualitative researchers, we often use the language of determinism to critique the “other,” by invoking fairly simplistic cause-and-effect characterizations such as the unflattering ones I just unleashed. This is a form of boundary work that both camps use to allegedly protect the values of freedom, diversity and individual agency against the oppressive, homogenizing other. After all, that is, collectively, what we qualitative researchers tend to do when we perform boundary work with quantitative researchers. It is a strategy we’ve honed over the years to help demarcate and protect our approach to knowledge.

So, you see, the lesson regarding these determinist versus constructivist discourses may be less about epistemologies and more about the language we use to engage in boundary work: to other-ize each other. This has never led to any positive, innovative changes in practice in any field, and it won't in ours either. Our challenge is to find new avenues for scholarship that minimize the boundary work and maximize the collaborative work.

References

Agar, M. (1991). The right brain strikes back. In N. G. Fielding & R. M. Lee (Eds.), Using computers in qualitative research (pp. 181-194).

Barry, C. A. (1998). Choosing qualitative data analysis software: Atlas/ti and Nud*ist compared. Sociological Research Online, 3(3).

Bourdieu, P. (1977). Outline of a theory of practice (R. Nice, Trans.). New York: Cambridge University Press.

Caro, R. A. (1974). The power broker. New York: Knopf.

Coffey, A., Holbrook, B., & Atkinson, P. (1996). Qualitative data analysis: Technologies and representations. Sociological Research Online, 1(1). http://www.socresonline.org.uk/1/1/4.html

Garcia-Horta, J. B., & Guerra-Ramos, M. T. (2009). The use of CAQDAS in educational research: Some advantages, limitations and potential risks. International Journal of Research & Method in Education, 32(2), 151-165.

Gieryn, T. F. (1983). Boundary-work and the demarcation of science from non-science: Strains and interests in professional ideologies of scientists. American Sociological Review, 48, 781-795.

Gieryn, T. F. (1999). Cultural boundaries of science: Credibility on the line. Chicago: The University of Chicago Press.

Henderson, K. (1999). On line and on paper: Visual representations, visual culture, and computer graphics in design engineering. Cambridge: MIT Press.

Hinchliffe, S. J., Crang, M. A., Reimer, S. M., & Hudson, A. C. (1997). Software for qualitative research: 2. Some thoughts on 'aiding' analysis. Environment and Planning A, 29(6), 1109-1124.

Joerges, B. (1999). Do politics have artifacts? Social Studies of Science, 29(3), 411-431.

MacMillan, K., & Koenig, T. (2004). The wow factor: Preconceptions and expectations for data analysis software in qualitative research. Social Science Computer Review, 22(2), 179-186.